Networld building - Building out enterprise grade network & services on homelab

Note: This project grew out of my goal to learn the system administration and networking side of IT in an enterprise context. Setting up an internal home network gave me a lot of insight into making setup decisions, as I got to build everything from the ground up using my own judgement.

This post outlines a series of steps that can be used to set up an enterprise-grade home network using basic consumer/prosumer hardware and open source software. The aim is to help enthusiasts with a similar mindset jump-start their own homelab project. Readers are expected to have a basic working knowledge of the layers of the OSI network model.

My home network was set up in the following three stages, each covering a different part of the stack:

- Section A: Network Setup

- Section B: Infrastructure & Services Setup

- Section C: Security Setup

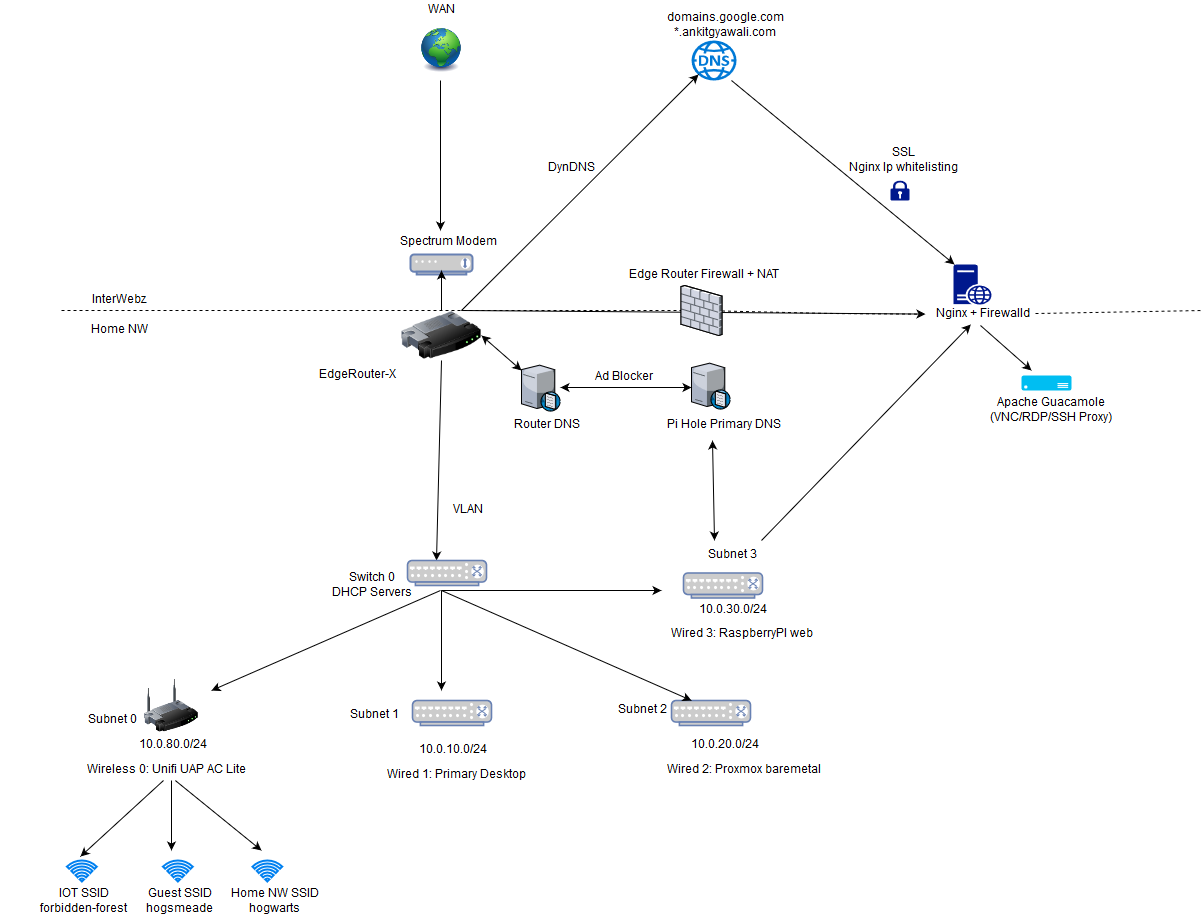

Section A: Network Setup

Devices:

My network setup process started with the purchase and configuration of the following networking items:

1x EdgeRouter X - The functionality this little device provides for just a little over 50 bucks is ridiculously awesome. Other options I considered were the USG from Ubiquiti and a virtualized pfSense. Unlike regular consumer routers, this router has no built-in wireless radio. While regular consumer routers can act as a router, switch and Wi-Fi access point all in one, they are far less configurable than the EdgeRouter with EdgeOS.

1x UAP AC US - This currently acts as my wireless AP and is also made by Ubiquiti, like the EdgeRouter. Besides being managed separately from the main routing device and providing advanced networking functionality, these devices can potentially be chained with other AP ACs.

5x RJ45 Ethernet cables - A pack of AmazonBasics Ethernet cables to connect the AP AC to the PoE port on the EdgeRouter and to wire up my regular devices. My primary workstation and servers needed wired connections to avoid any bottlenecks on network I/O. With this setup, my internal network throughput is good enough to handle extra client devices.

Execution:

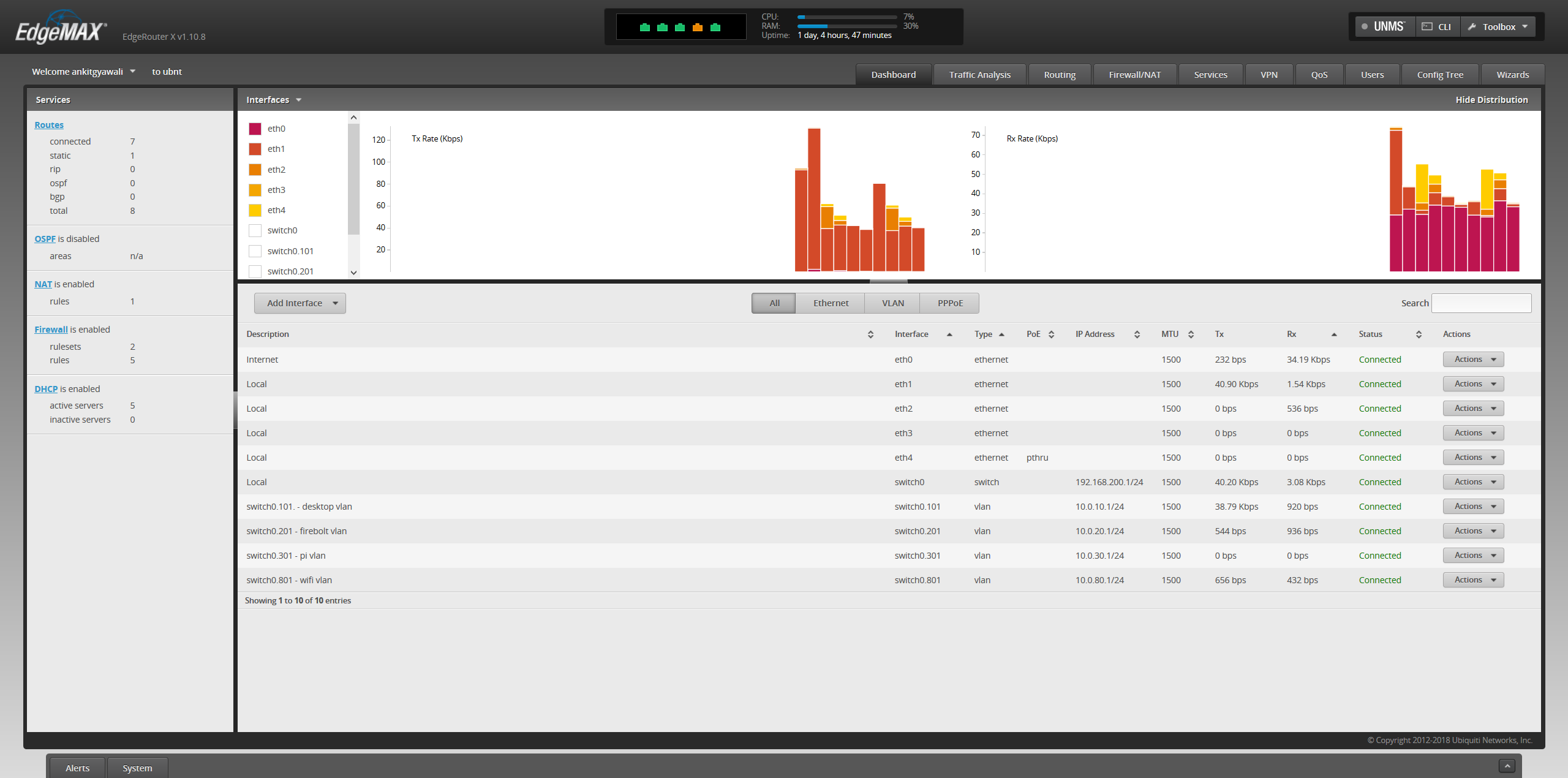

a. Set up an internal switch via the EdgeRouter and assign it to a separate internal VLAN.

b. Set up 4 separate subnets within that VLAN (one dedicated to each physical eth-n port). Create a DHCP server for each subnet so that each server hands out IPs from a different CIDR block.

| Subnet | Description |
|---|---|
| 10.0.10.0/24 | Connected to my primary workstation, with full access to the other subnets for management. |
| 10.0.20.0/24 | Dedicated port for the devices powering the home lab. The devices on this subnet can later be connected to a virtual router interface such as pfSense as more "bare metals" are added. |
| 10.0.30.0/24 | Dedicated port for ingress from the WAN, connected to the Raspberry Pi web server. The web server listens on ports 443 and 81 for incoming traffic. The ad-blocking Pi-hole is set up on port 80. |
| 10.0.80.0/24 | Connected to the wireless AP to hand out IPs to wireless devices. |
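The addressing plan in the table can be sanity-checked with a few lines of Python. This is only a sketch built on the standard `ipaddress` module. The CIDR blocks come from the table, while the port labels (`workstation`, `homelab` and so on) are names I made up for illustration and are not EdgeOS identifiers.

```python
import ipaddress
from typing import List, Optional, Tuple

# CIDR blocks from the table above; the labels are illustrative only.
SUBNETS = {
    "workstation": ipaddress.ip_network("10.0.10.0/24"),
    "homelab": ipaddress.ip_network("10.0.20.0/24"),
    "webserver": ipaddress.ip_network("10.0.30.0/24"),
    "wireless": ipaddress.ip_network("10.0.80.0/24"),
}


def subnet_for(address: str) -> Optional[str]:
    """Return the label of the subnet containing address, or None."""
    ip = ipaddress.ip_address(address)
    for label, network in SUBNETS.items():
        if ip in network:
            return label
    return None


def overlapping_pairs() -> List[Tuple[str, str]]:
    """List every pair of subnets whose address ranges overlap."""
    labels = list(SUBNETS)
    return [
        (a, b)
        for i, a in enumerate(labels)
        for b in labels[i + 1:]
        if SUBNETS[a].overlaps(SUBNETS[b])
    ]


if __name__ == "__main__":
    print(subnet_for("10.0.20.15"))
    print(overlapping_pairs())
```

An empty list from `overlapping_pairs()` means the four DHCP scopes cannot hand out conflicting addresses.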

c. Set up dedicated AP SSIDs via the UniFi Controller portal: one for IoT, one as a guest network and a final one with access to the internal home network.

| SSID | Type |
|---|---|
| forbidden-forest | Private Network with no internet access. |
| hogwarts | Private Network with internet access. |
| hogsmeade | Guest network with access to internet but no access to internal network. |

d. Set up nginx on the Raspberry Pi to act as the primary ingress web server, which can selectively whitelist IPs for traffic trying to reach internal network port forwards from the internet.

e. Set up Pi-hole on the Raspberry Pi to act as the primary DNS server. The Pi's /etc/hosts can additionally be modified to hold aliases that we do not want on the EdgeRouter but can keep on the Pi.
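The IP whitelisting in step d is handled by nginx itself (its `allow` and `deny` directives do this kind of matching). The Python sketch below only illustrates the matching logic and is not my actual configuration. The allowlist entries are hypothetical and use documentation address ranges.

```python
import ipaddress

# Hypothetical allowlist; these are documentation ranges, not real clients.
ALLOWLIST = [
    ipaddress.ip_network("203.0.113.0/24"),   # e.g. an office network
    ipaddress.ip_network("198.51.100.7/32"),  # e.g. a single trusted host
]


def is_allowed(client_ip, allowlist=ALLOWLIST):
    """Return True when client_ip falls inside any allowlisted network."""
    ip = ipaddress.ip_address(client_ip)
    return any(ip in network for network in allowlist)


if __name__ == "__main__":
    for candidate in ("203.0.113.42", "198.51.100.8"):
        print(candidate, is_allowed(candidate))
```

Anything that does not match the allowlist would be rejected before it reaches a port forward.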

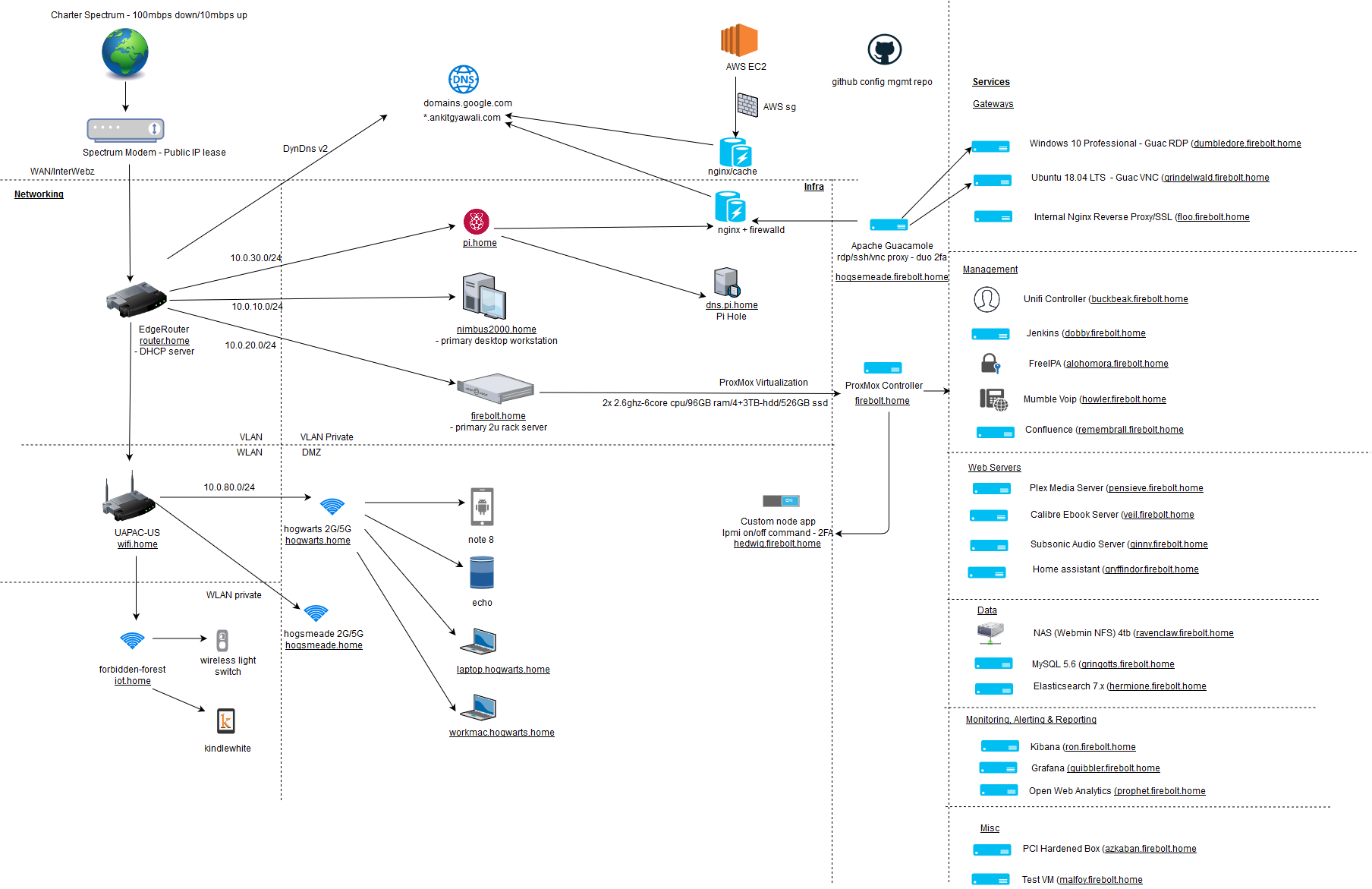

Section B: Infrastructure & Services Setup

Devices:

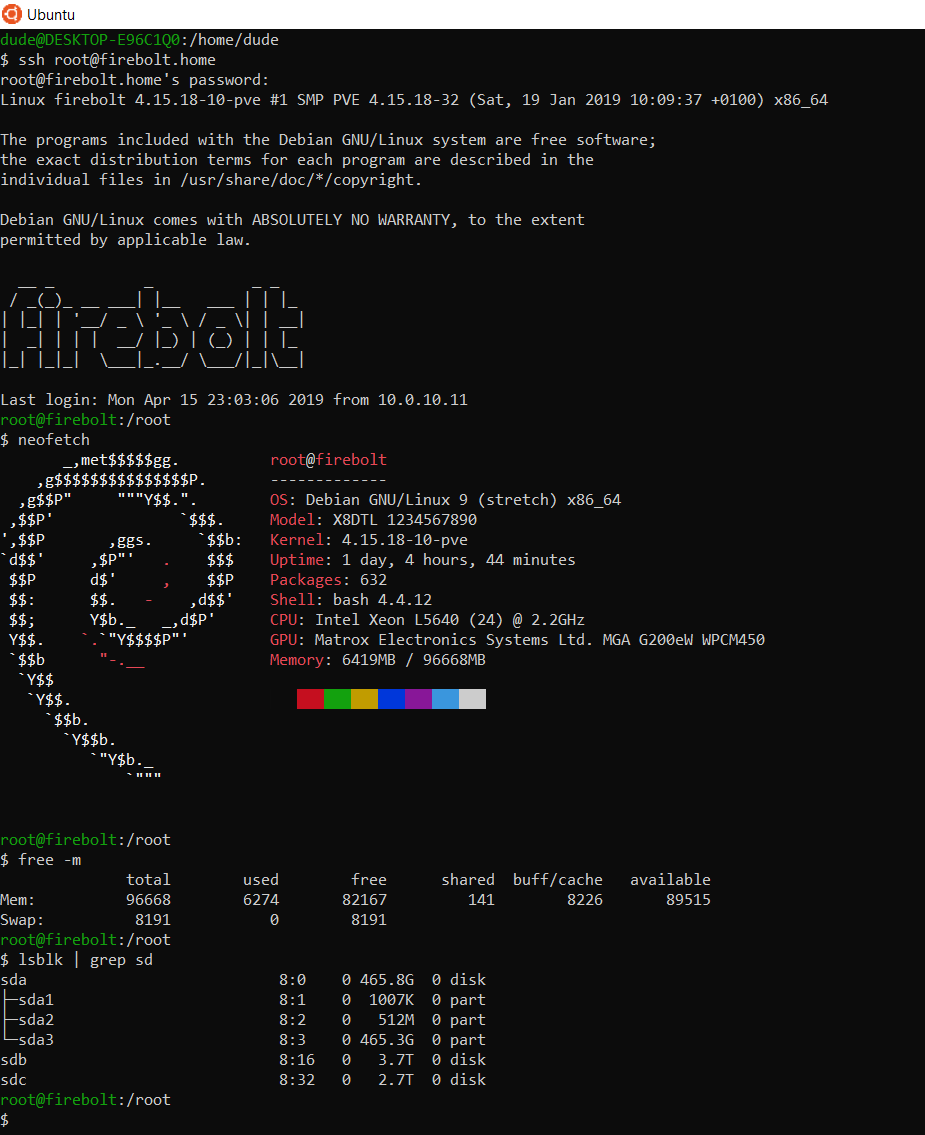

- Main Baremetal Server - After great results from my first attempt at building a workstation, I decided to build my own bare metal server from scratch. The end result was quite satisfactory: I managed to build a server with great specifications, low noise and low power draw for almost one third of the price of a similarly spec'd, heavier, noisier, more power-hungry Dell R720. The specifications are:

- SuperMicro X8DTL-IF Dual LGA1366 Motherboard

- 2x Intel Xeon 5640 CPU - 12 total cores

- 6x 16GB RAM - 96GB Total RAM

- 1x 4 TB HDD & 1x 3TB HDD - 7TB Total Storage

- 512 GB SSD - To speed up boot times of the base bare metal Proxmox OS and to hold the home directories of VMs/LXCs.

- Raspberry Pi Web Server - This is a simple Raspberry Pi with a clear case and a 32 GB SD card. It simply acts as an nginx reverse proxy server and a Pi-hole DNS server. According to other users' experience in the forums, relying on SD cards for storage is questionable at best, so the intention is to replace or complement it with an Intel NUC in the future.

Execution:

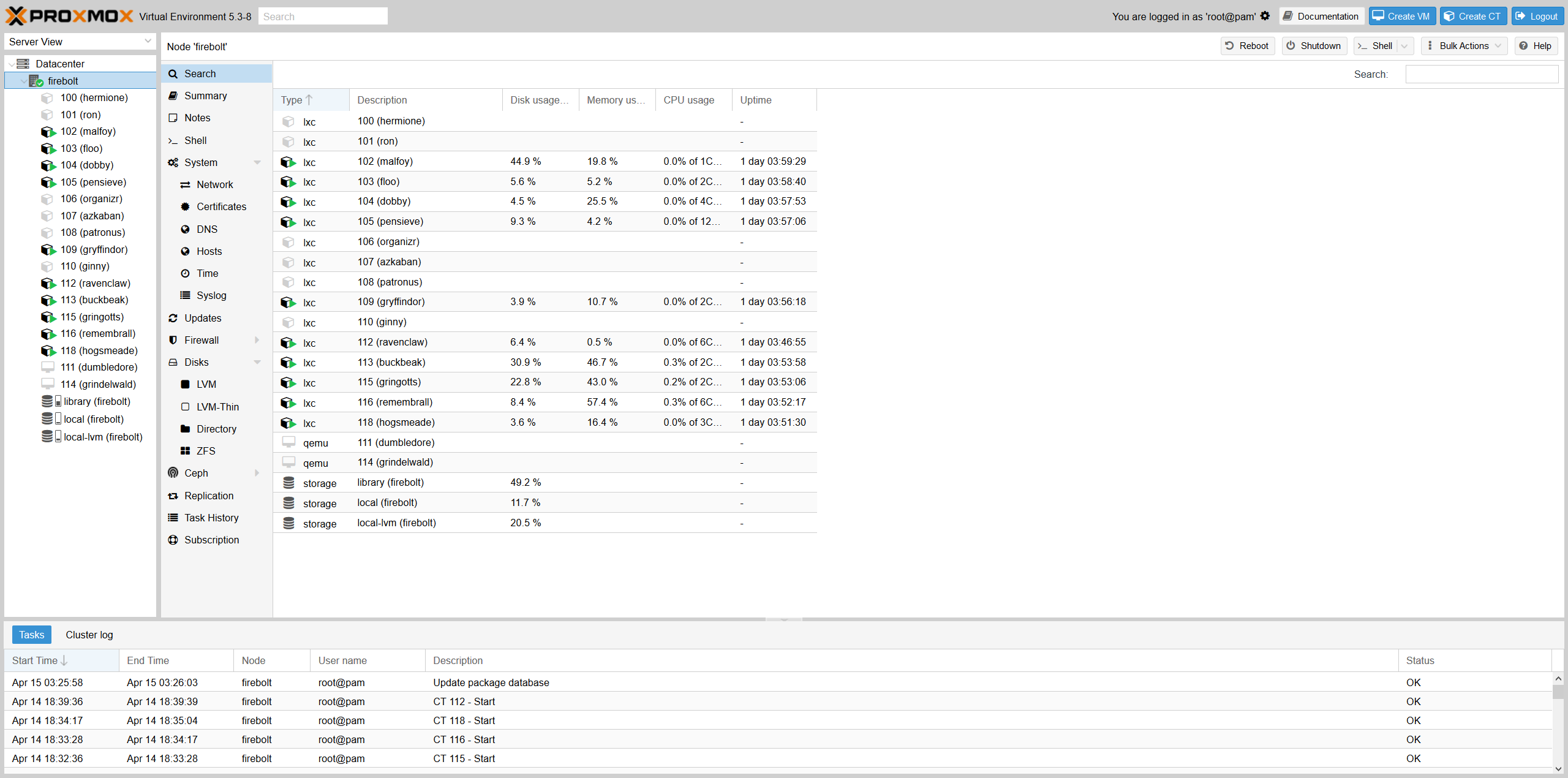

There is a whole slew of bare metal OSes available, with some commonly used options being ESXi, Proxmox, Unraid or possibly even RancherOS. I chose to go with Proxmox because of its underlying Linux OS. Not only does it support lightweight templated LXC containers, it also has solid support for VMs and Windows virtualization. The Proxmox controller is completely managed via a web UI. The LXCs and VMs can also be managed and configured via command line tools on the base Debian operating system. There are various LXC templates available; my most frequently used one was CentOS (~60 MB) for its small image size. I decided to refrain from further virtualization with Docker because LXC is already lightweight. If a switch to Kubernetes were to be made, it could take the form of dedicated VMs on the Proxmox node and similarly dedicated VMs on other nodes running a different bare metal OS.

There are two primary VMs on my first Proxmox node; the rest are LXCs. The services have been given affectionate Harry Potter codenames!

The services are divided into the following classes of containers:

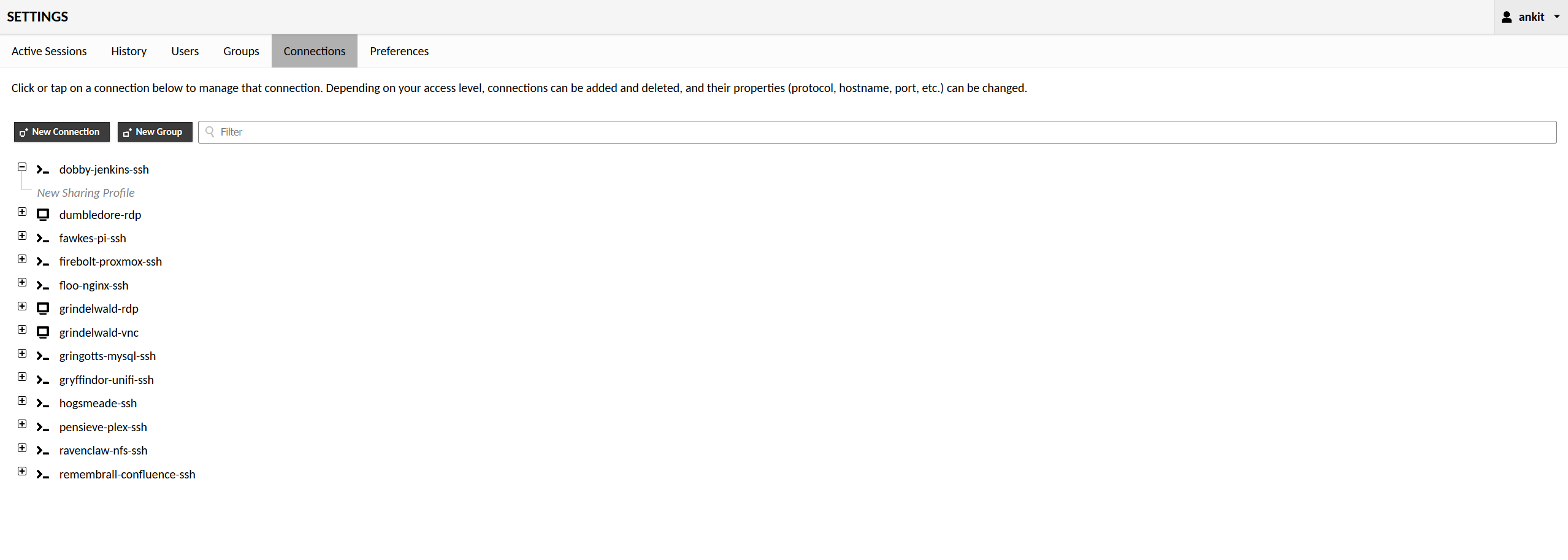

- Gateway - VMs with GUIs that act as "jumpboxes" to consume services from the internal network via Guacamole across the internet.

- Networking - Containers managing internal networking.

- Management - LXCs used to manage internal services. Various patching and deployment scripts have been implemented as part of these services.

- Core - Core service containers, supported by the other classes of containers.

- Data - Data storage related services. Has the capability to ingest data from the cloud over SSL.

- Monitoring - Monitoring, alerting and reporting related services.

- Miscellaneous - Extra test related containers.

| Service Name | Description | Codename | Class |
|---|---|---|---|
| Windows 10 Professional | This is set up as one of the two primary RDP servers, proxied via Apache Guacamole to consume my services across the WAN. | Dumbledore | Gateway |
| Ubuntu 18.04 LTS | This is the second server, running Linux with a GUI desktop (GNOME with a Mac Sierra skin). Its VNC is proxied via Apache Guacamole to consume services across the WAN. | Grindlewald | Gateway |
| Apache Guacamole | This is the primary service exposed to the WAN. It is secured at the transport layer via nginx auth, with an application password, and with Duo authentication as a second factor. It acts as a proxy to safely forward to all my services over the SSH, RDP and VNC protocols. This allows me to safely access my services with guaranteed encryption without having to expose the primary bare metal controller or the VMs directly. | Hogsmeade | Gateway |
| Nginx Server | This VM is responsible for enforcing SSL within the internal network with a self-signed CA. A naming convention is enforced for consistency across VMs: <code-name>.firebolt.home (e.g. hogsmeade.firebolt.home) maps to the service proxied via nginx with SSL, and <code-name>.home (e.g. hogsmeade.home) maps directly to the internal IP in the 10.0.20.0/24 range. | Floo | Networking |
| Unifi Controller | Buckbeak hosts the main control portal to configure the UAP AC wireless access point. Besides monitoring stats, it provides settings to configure the various SSIDs. | Buckbeak | Networking |
| Jenkins | The Jenkins server has various jobs configured, such as mounting NFS on the media server, selectively starting and stopping certain containers based on desired "profiles", and deploying custom patches consistently across all VMs. | Dobby | Management |
| FreeIPA | This is still an exploratory SSO server at the moment. | Alohomora | Management |
| Mumble VoIP | An audio server to allow communication using the Mumble client. | Howler | Management |
| Confluence | Self-hosted Confluence with a lifetime license and the Draw IO plugin to draw and document anything, organized by spaces. The basic license allows up to 10 users. | Remembrall | Management |
| Plex Media Server | Main TV series, movie and audio streaming server, configured with Plex Pass. | Pensieve | Core |
| Emby | Backup media server. | Patronous | Core |
| Calibre Ebook Server | Calibre server with an NFS mount containing 100k+ e-book dumps, primarily in PDF and EPUB format. | Veil | Core |
| Subsonic Audio Server | Backup audio and audiobook server. | Ginny | Core |
| Home Assistant Server | Home Assistant server to control IoT devices on the internal network, such as smart switches and Alexa skills. | Gryffindor | Core |
| NAS Server | Basic NFS server with Webmin installed so it can be controlled via a web browser. | Ravenclaw | Data |
| MySQL Server | MySQL server with an extendable LVM volume attached for database needs across various services (Grafana, Guacamole, Confluence, Calibre etc). | Gringotts | Data |
| Elasticsearch | Single node Elasticsearch 7 server. | Hermione | Data |
| Kibana | Kibana server to complement the Elasticsearch server. | Ron | Monitoring |
| Grafana | Grafana server to be hooked up to the Elasticsearch and MySQL servers to leverage its alerting engine. Possible eventual integration with a Prometheus server. | Quibbler | Monitoring |
| Open Web Analytics | Test analytics server to replicate cloud analytics data. | Prophet | Monitoring |
| Hardened Box | Test toolbox with security tools. | Azkaban | Miscellaneous |
| Test VM | Small CentOS box to test stuff. | Malfoy | Miscellaneous |
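The naming convention described for the Nginx server in the table can be expressed as a small helper that turns a codename into its two hostnames and renders hosts-file style lines. This is a sketch rather than the tooling I actually use. The IP addresses in the usage example are made up, and the assumption that every proxied name points at a single nginx address is mine.

```python
def names_for(codename):
    """Return the proxied and direct hostnames for a service codename."""
    codename = codename.strip().lower()
    return {
        "proxied": f"{codename}.firebolt.home",  # served via nginx with SSL
        "direct": f"{codename}.home",            # internal IP in 10.0.20.0/24
    }


def hosts_lines(services, proxy_ip):
    """Render hosts-file style lines for a {codename: internal_ip} mapping.

    proxy_ip is the (assumed) address of the nginx box that fronts
    every <code-name>.firebolt.home name.
    """
    lines = []
    for codename, internal_ip in sorted(services.items()):
        names = names_for(codename)
        lines.append(f"{proxy_ip}\t{names['proxied']}")
        lines.append(f"{internal_ip}\t{names['direct']}")
    return lines


if __name__ == "__main__":
    # Hypothetical addresses, for illustration only.
    example = {"hogsmeade": "10.0.20.11", "dobby": "10.0.20.12"}
    print("\n".join(hosts_lines(example, proxy_ip="10.0.20.5")))
```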

Section C: Security Setup

The security aspect of my network was the lengthiest part of the execution. This was unexpected compared with my initial time allocation. The problem of security required a lot more organization than I had initially realized.

Internal networking is completely managed by the EdgeRouter at this point (no virtualization with pfSense yet).

Each subnet is isolated via the UNMS control panel, which interacts with EdgeOS on the EdgeRouter.

Connections between devices are resolved via the internal DNS provided by the EdgeRouter. The EdgeRouter forwards uncached DNS requests to Pi-hole so that ads can be selectively blocked across the entire network.
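The resolution chain above (local records and cache on the EdgeRouter first, then Pi-hole for anything uncached) can be modelled with a toy simulation. It is a simplified sketch, not how EdgeOS or Pi-hole are implemented: the class names are hypothetical, upstream DNS is a plain dictionary, and answering blocked names with `0.0.0.0` is an assumption about the blocking mode in use.

```python
BLOCKED_ANSWER = "0.0.0.0"  # assumed answer for blocked domains


class PiHole:
    """Toy forwarder that blocks listed domains and answers the rest."""

    def __init__(self, blocklist, upstream):
        self.blocklist = set(blocklist)
        self.upstream = dict(upstream)  # stand-in for real upstream DNS

    def resolve(self, name):
        if name in self.blocklist:
            return BLOCKED_ANSWER
        return self.upstream.get(name)


class EdgeRouterDns:
    """Toy resolver: local records, then cache, then the forwarder."""

    def __init__(self, local_records, forwarder):
        self.local = dict(local_records)
        self.forwarder = forwarder
        self.cache = {}
        self.forwarded = 0  # number of queries sent to the forwarder

    def resolve(self, name):
        if name in self.local:
            return self.local[name]
        if name in self.cache:
            return self.cache[name]
        self.forwarded += 1
        answer = self.forwarder.resolve(name)
        if answer is not None:
            self.cache[name] = answer
        return answer


if __name__ == "__main__":
    pihole = PiHole({"ads.example.com"}, {"example.com": "93.184.216.34"})
    router = EdgeRouterDns({"hogsmeade.home": "10.0.20.11"}, pihole)
    for query in ("hogsmeade.home", "ads.example.com", "example.com"):
        print(query, router.resolve(query))
```

Because every client uses the EdgeRouter as its resolver, the block list applies network-wide without configuring individual devices.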

Only two services have been exposed to the internet from the home network. Both are encrypted via nginx and at the application layer, with Duo Security as a final second factor.

- The first service is a custom node page that executes a static IPMI command to turn the main firebolt server on and off remotely.

- The second service exposes the Apache Guacamole client endpoint, reverse proxied via nginx with Let's Encrypt SSL. The Guacamole client then encrypts the communication protocols and provides access to the main RDP/SSH services in the internal network, which in turn can reach non-hardened services in the internal network.
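The idea behind the first service, running only a fixed set of IPMI commands, can be sketched as follows. My page is written for node, so this Python version is an illustration rather than the real code. It assumes the `ipmitool` CLI with the `lanplus` interface and a password file, and the host, user and file path in the usage example are placeholders.

```python
import subprocess

# Only these fixed power actions are accepted; nothing from the request
# is ever passed through to the command line unchecked.
ALLOWED_ACTIONS = ("on", "off", "status")


def build_ipmi_command(action, host, user, password_file):
    """Build the ipmitool argument list for one allowlisted power action."""
    if action not in ALLOWED_ACTIONS:
        raise ValueError(f"unsupported action: {action!r}")
    return [
        "ipmitool",
        "-I", "lanplus",      # assumption: the BMC speaks IPMI v2.0
        "-H", host,
        "-U", user,
        "-f", password_file,  # read the password from a file
        "chassis", "power", action,
    ]


def run_power_action(action, host, user, password_file):
    """Run the command and return its output; requires ipmitool installed."""
    command = build_ipmi_command(action, host, user, password_file)
    result = subprocess.run(command, check=True, capture_output=True, text=True)
    return result.stdout.strip()


if __name__ == "__main__":
    # Placeholder values, for illustration only.
    print(build_ipmi_command("status", "10.0.20.2", "admin", "/etc/ipmi.pass"))
```

Passing the arguments as a list (no shell) and rejecting anything outside `ALLOWED_ACTIONS` keeps the exposed endpoint from becoming a general command runner.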

Certain VMs have extra firewalld rules and second factor authentication added.

Conclusion:

The project has been a great experience in trying to mimic an enterprise-grade network setup using open source options.

The following are ideas for extending the project:

- Extend my homelab setup with a secondary Intel NUC as an additional node. Use ESXi instead of Proxmox on the second node.

- Add an extra layer of network virtualization using pfSense and Linux Bridge. This will give me the option to switch easily from the EdgeRouter to another device if I choose to do so in the future.

- Completely refactor the services setup to use Rancher 2/Kubernetes to make my platform bare metal controller agnostic. This would mean a switch from LXC templates to Docker images. Provisioning would look vastly different, with Docker images pre-built and stored instead of bash scripts applied to base LXC templates.

Screenshots

Base Server:

Proxmox Home:

Apache Guacamole:

Edgerouter: